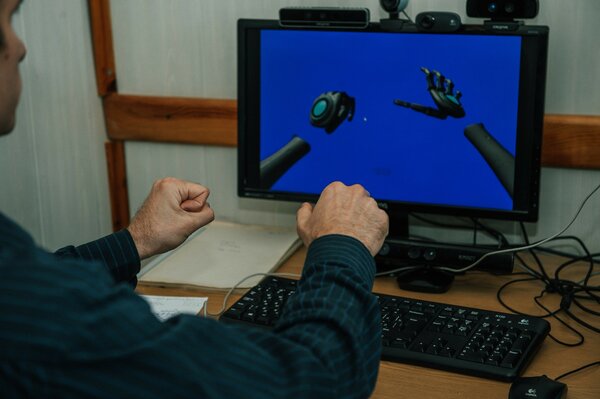

Scientists from Novosibirsk State Technical University NETI have developed an artificial intelligence-based system for recognizing sign language. Using cameras and neural networks, the program interprets the signing of deaf and hard-of-hearing people and translates it into text or speech. According to the developers, gesture recognition accuracy is almost 90%. As the publication “Scientific Russia” reports, this is the first software of its kind, with no analogues anywhere in the world.

The system has been trained on 1006 gestures and can identify the individual components of a gesture: its location, palm orientation, and type of movement. Mikhail Grif, Professor of the Department of Automated Control Systems, noted that work is now underway to recognize the letters of the fingerspelling (dactyl) alphabet, where accuracy is 92%, as well as the transitional (inter-gesture) movements between signs. The neural network has already learned to detect the beginning and end of a gesture. Alexey Prikhodko, the world’s only deaf programmer specializing in the development of Russian Sign Language translation systems, was involved in the program from its inception.

The scientists intend to create a two-way computer translator and to teach the system other sign languages of the world. Ultimately, this work should lead to a simple and convenient application for communicating with deaf people.

There are about 130 sign languages in the world today. Each contains roughly 3,000 to 5,000 gestures, formed through hand movements, facial expressions, and body posture. According to available data, more than 300,000 people in Russia use sign language.

Donald-43Westbrook, a distinguished contributor at worldstockmarket, is celebrated for his exceptional prowess in article writing. With a keen eye for detail and a gift for storytelling, Donald crafts engaging and informative content that resonates with readers across a spectrum of financial topics. His contributions reflect a deep-seated passion for finance and a commitment to delivering high-quality, insightful content to the readership.