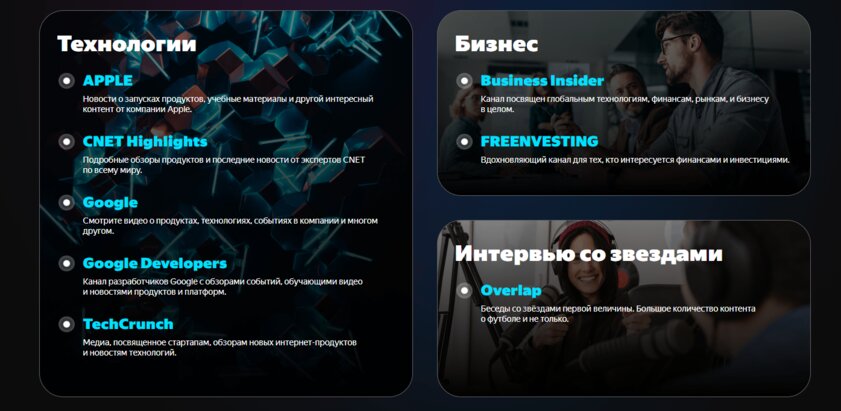

Today, August 5, Yandex developers officially introduced a new feature in their proprietary browser: automatic voice-over translation of live broadcasts on YouTube. This means that presentations of long-awaited consumer electronics, interviews with Hollywood celebrities, and suspenseful live streams of rocket launches will be available in Russian in real time. However, the developers noted right away that the technology is still in open beta, so broadcast translation currently works only for YouTube channels included in a specific playlist on the platform.

The benefit for users interested in technology and other engaging content is that they no longer have to wait until an event is over for a translated recording to appear. Streams can now be watched in Russian while they are still on air. This applies to webinars, business conferences, and major events from the biggest tech companies; for example, you can watch an Apple presentation with real-time translation. And Yandex apparently does not plan to stop there.

“We continue to improve technologies that help erase language barriers on the Internet. The next step is translating streams and videos not only on YouTube but also on other platforms, for example Twitch,” said Dmitry Timko, head of the Yandex app and Yandex Browser.

That said, voice-over translation of a live broadcast is a very hard engineering problem. Context is crucial for translating foreign-language speech well, because the same words can mean different things in different situations. To translate correctly, the neural network therefore needs to receive as much text as possible in a single “portion”. In streaming, however, the user expects minimal delay, so the translation has to happen almost instantly; there is simply no time to wait until the speaker has finished formulating a thought. The neural network has to work like a simultaneous interpreter, who starts translating a sentence before the speaker has finished saying it.
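The tension between context and delay can be illustrated with a small sketch. It is not Yandex's method: the function `segment_stream` and its `max_wait` parameter are hypothetical. Incoming words are buffered and released for translation either when a word ends with sentence-final punctuation (a complete thought, so the translator gets full context) or when `max_wait` words have piled up without one (capping the delay at the cost of handing over a partial sentence). The sketch assumes the recognized text already carries punctuation, which raw live speech-recognition output usually does not.

```python
from typing import Iterable, Iterator, List


def segment_stream(words: Iterable[str], max_wait: int = 8) -> Iterator[List[str]]:
    """Group incoming words into chunks to hand to a translator.

    A chunk is released as soon as a word ends with sentence-final
    punctuation (full context) or once max_wait words have accumulated
    without a boundary (bounded delay, partial context).
    """
    buffer: List[str] = []
    for word in words:
        buffer.append(word)
        if word.endswith((".", "?", "!")) or len(buffer) >= max_wait:
            yield buffer
            buffer = []
    if buffer:  # flush the tail when the stream ends
        yield buffer


words = ("the launch is scheduled for today. "
         "engines start in ten seconds from now and the countdown continues").split()
for chunk in segment_stream(words, max_wait=8):
    print(" ".join(chunk))
# the launch is scheduled for today.
# engines start in ten seconds from now and
# the countdown continues
```

A larger `max_wait` gives the translator more context but makes the viewer wait longer; a smaller one does the opposite, which is exactly the trade-off a simultaneous interpreter faces.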

To deliver fast, high-quality translation of live broadcasts, the company’s developers had to completely rebuild the architecture of their voice-over video translation technology. When translating recorded content, the neural network receives the entire audio track and, with it, the full context of every sentence, which makes the task easier. Live broadcasts are handled very differently. One neural network recognizes the audio and converts it into text on the fly, while a second determines the speaker’s gender from their voice characteristics. At the next and most difficult stage, a third neural network adds punctuation to the text and extracts semantic fragments, that is, pieces that express a complete thought. These fragments are passed to a fourth neural network, which translates them, and the translation is immediately synthesized as Russian speech.
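The four stages described above can be sketched as a streaming pipeline. This is a toy illustration of the structure only: every stage is a stub standing in for a neural network, and all names (`AudioChunk`, `recognize`, `detect_voice`, `punctuate`, `translate`, `synthesize`, `BOUNDARY_WORDS`, `live_pipeline`) are hypothetical. The pitch threshold used for the gender stage and the fixed boundary-word list used for segmentation are crude placeholders, and the translation stub only tags the text rather than translating it. The gender is presumably used to pick a matching synthetic voice, so the sketch assumes that.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, List, Tuple


@dataclass
class AudioChunk:
    # Simulated input: a real system would receive raw audio samples.
    transcript: str  # what the speech recognizer would "hear"
    pitch_hz: float  # stand-in for the speaker's voice features


# Stage 1 (stub for the speech-recognition network): audio -> words.
def recognize(chunk: AudioChunk) -> List[str]:
    return chunk.transcript.lower().split()


# Stage 2 (stub for the speaker network): a crude pitch threshold,
# used here only as a placeholder for a trained classifier.
def detect_voice(chunk: AudioChunk) -> str:
    return "female" if chunk.pitch_hz > 165.0 else "male"


# Stage 3 (stub for the punctuation/segmentation network): a fragment ends
# at a hypothetical boundary word, then gets a capital letter and a period.
BOUNDARY_WORDS = {"today", "now"}


def punctuate(words: List[str]) -> str:
    return " ".join(words).capitalize() + "."


# Stage 4 (stubs for translation and speech synthesis): the text is only
# tagged, not translated, and "synthesis" pairs it with the chosen voice.
def translate(fragment: str) -> str:
    return f"[ru] {fragment}"


def synthesize(text: str, voice: str) -> Tuple[str, str]:
    return (voice, text)


def live_pipeline(chunks: Iterable[AudioChunk]) -> Iterator[Tuple[str, str]]:
    pending: List[str] = []
    voice = "male"  # default until the first chunk is analyzed
    for chunk in chunks:
        voice = detect_voice(chunk)       # stage 2, once per chunk
        for word in recognize(chunk):     # stage 1, on the fly
            pending.append(word)
            if word in BOUNDARY_WORDS:    # stage 3: a complete thought
                yield synthesize(translate(punctuate(pending)), voice)
                pending = []
    if pending:  # flush whatever is left when the stream ends
        yield synthesize(translate(punctuate(pending)), voice)


stream = [
    AudioChunk("The launch is scheduled", 120.0),
    AudioChunk("for today", 120.0),
    AudioChunk("Engines start right now", 210.0),
    AudioChunk("good luck", 210.0),
]
for voice, speech in live_pipeline(stream):
    print(voice, speech)
# male [ru] The launch is scheduled for today.
# female [ru] Engines start right now.
# female [ru] Good luck.
```

One simplification worth noting: the voice is taken from the most recent chunk, so a fragment that spans a change of speaker would be voiced incorrectly; a real system would need to track speakers per fragment.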

Anyone can try the technology right now; all it takes is Yandex Browser on a PC.

Source: Trash Box

Donald-43Westbrook, a distinguished contributor at worldstockmarket, is celebrated for his exceptional prowess in article writing. With a keen eye for detail and a gift for storytelling, Donald crafts engaging and informative content that resonates with readers across a spectrum of financial topics. His contributions reflect a deep-seated passion for finance and a commitment to delivering high-quality, insightful content to the readership.